LiteLLM

Logfire supports instrumenting calls to the LiteLLM Python SDK with the logfire.instrument_litellm() method.

Installation

Install logfire with the litellm extra, using either pip or uv:

pip install 'logfire[litellm]'

uv add 'logfire[litellm]'

Usage

import litellm
import logfire

logfire.configure()
logfire.instrument_litellm()

response = litellm.completion(
    model='gpt-4o-mini',
    messages=[{'role': 'user', 'content': 'Hi'}],
)
print(response.choices[0].message.content)
# > Hello! How can I assist you today?

Warning

This currently works best if all arguments of instrumented methods are passed as keyword arguments, e.g. litellm.completion(model=model, messages=messages).
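One way to follow this advice is to collect the arguments in a dictionary and unpack it, so that every value reaches litellm.completion by keyword. The following is a minimal sketch; build_completion_kwargs and ask are hypothetical helper names used only for illustration, not part of LiteLLM or Logfire.

```python
from typing import Any


def build_completion_kwargs(model: str, prompt: str) -> dict[str, Any]:
    """Collect the arguments for litellm.completion as a keyword dictionary."""
    return {
        'model': model,
        'messages': [{'role': 'user', 'content': prompt}],
    }


def ask(model: str, prompt: str) -> str:
    """Hypothetical helper: send one user message and return the reply text."""
    # Imported lazily so build_completion_kwargs works without LiteLLM installed.
    import litellm

    # Unpacking with ** passes every argument by keyword, never positionally.
    response = litellm.completion(**build_completion_kwargs(model, prompt))
    return response.choices[0].message.content
```

Calling ask('gpt-4o-mini', 'Hi') after logfire.configure() and logfire.instrument_litellm() would make the same request as the usage example above, with model and messages both passed as keywords.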

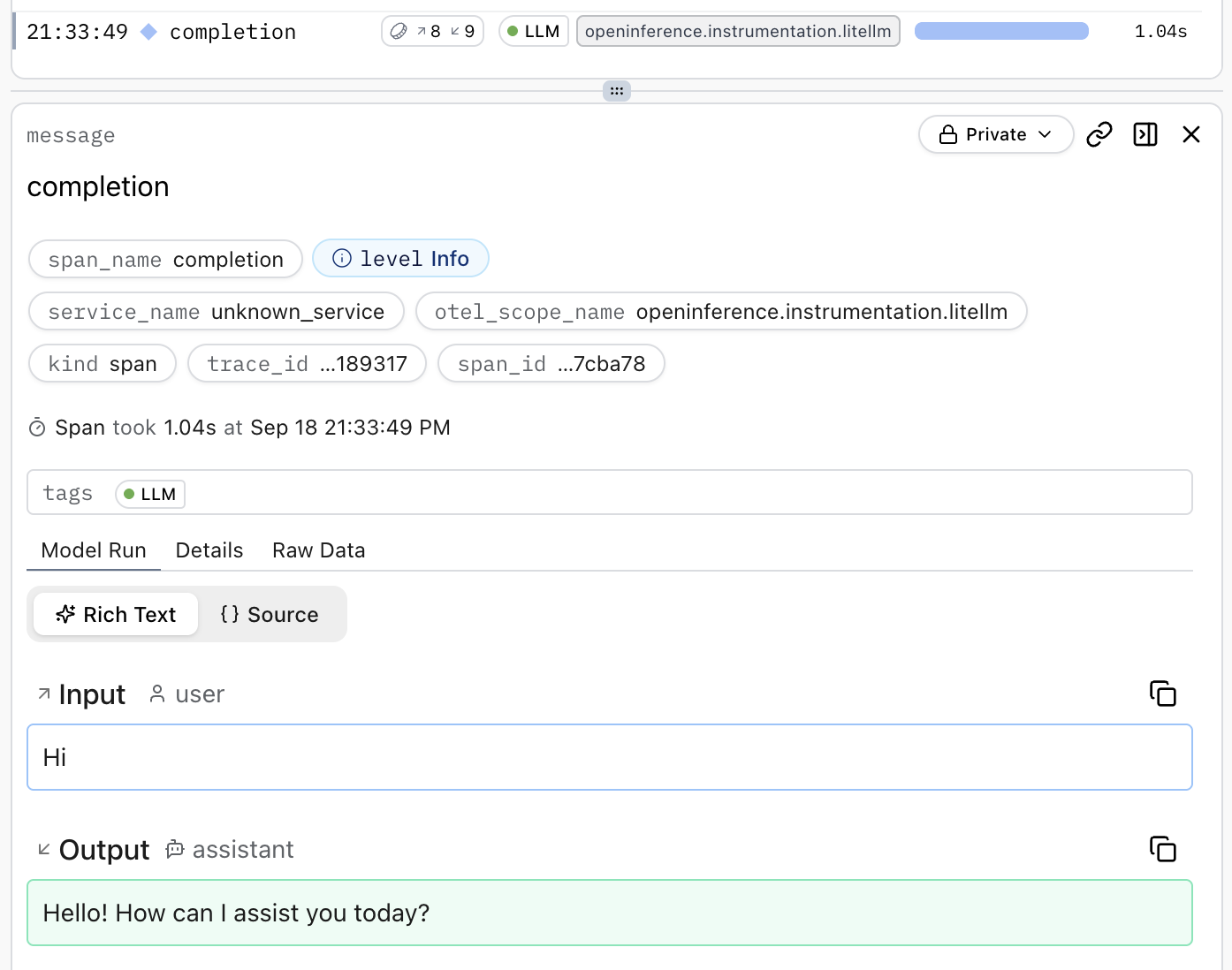

This creates a span which shows the conversation in the Logfire UI:

logfire.instrument_litellm() uses the LiteLLMInstrumentor().instrument() method of the openinference-instrumentation-litellm package.
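For illustration, calling that underlying instrumentor directly might look like the sketch below. It assumes the openinference-instrumentation-litellm package is installed; instrument_litellm_directly is a hypothetical name, and any extra arguments that Logfire itself passes to instrument() are not shown here.

```python
def instrument_litellm_directly() -> None:
    """Hypothetical helper: call the OpenInference instrumentor without Logfire."""
    # Imported lazily: requires the openinference-instrumentation-litellm package.
    from openinference.instrumentation.litellm import LiteLLMInstrumentor

    # instrument() patches LiteLLM so that its calls emit OpenTelemetry spans.
    LiteLLMInstrumentor().instrument()
```

In normal use, logfire.instrument_litellm() is the simpler choice, since it makes this call for you.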

Note

LiteLLM has its own integration with Logfire, but we recommend using logfire.instrument_litellm() instead.